I’ve been using the OpenAI API for several weeks, and I’ve been thinking about how smart ChatGPT is and how it can understand my prompts even when there are missing words from the text.

That’s when the idea struck me: ‘What if I could reduce the words used in my prompts through the API to save money on token usage?’

That’s exactly what I’m going to demonstrate in this post, and you’ll see that it’s a straightforward process. I’ll divide the content into two sections: one for those who want to learn the code and how I accomplished it, and the other with a finished app where you can simply enter the text you desire.

How the prompt reducer works

The first thing you need to know is that our prompt is going to pass in two cleaning phases:

- First, we will remove the stop words from the text. Stop words refer to a collection of frequently employed words within a given language. For instance, in the English language, words like “the,” “is,” and “and” are prime examples of stop words

- Then, we will lemmatize all the verbs. The lemmatizer is a process that reduces words to their base or root form, which helps in simplifying and standardizing verb forms and makes it easier for language models like GPT to understand and generate text.

So, let’s take an example with this description of the game Baldur’s Gate 3, taken from Wikipedia:

“Baldur’s Gate 3 is a role-playing video game with a single-player and cooperative multiplayer element. Players can create one or more characters and form a party along with a number of pre-generated characters to explore the game’s story”

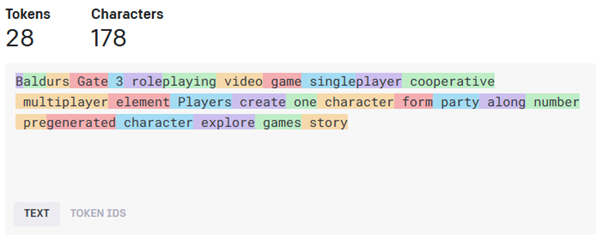

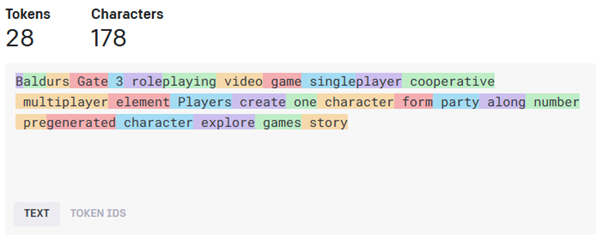

If we were to put this in the Tokenizer by OpenAI, it tells us we would spend 49 tokens:

After our prompt reducer, the text we get is:

“Baldurs Gate 3 roleplaying video game singleplayer cooperative multiplayer element Players create one character form party along number pregenerated character explore games story”

We went from 49 to 28 tokens, that is a 42.86% reduction in token usage.

Possible Use Cases: Why Would You Want to Reduce Token Usage?

If you’ve already used the OpenAI API, you probably know that it charges based on the number of tokens, including both your inputs and the responses from OpenAI. While using a model like GPT-3.5 may not be too expensive, using GPT-4 with extensive data can become costly.

In my case, I’ve developed an app that retrieves product descriptions from an .xlsx file and rewrites them. For every product on a website, I send a product description to GPT-4 and receive the rewritten product description in return. If you, like me, are sending a substantial amount of content to the API, reducing tokens might be a good idea.

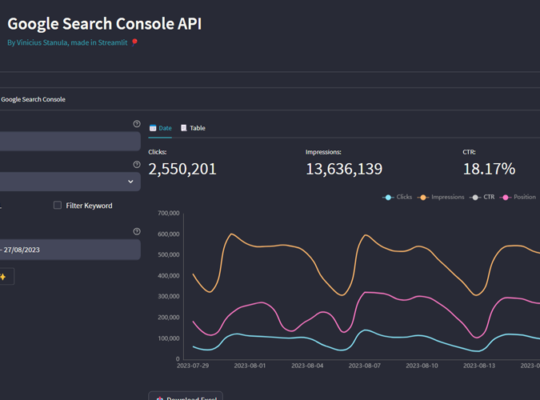

I’ve also created a script that takes descriptions from web pages and generates SEO-optimized meta titles and meta descriptions. This script uses the same text cleaning method that I’m demonstrating here.

How the code in OpenAI Prompt Reducer works

Section 1: Understanding the Code

The code is pretty straightforward, and besides importing the modules, it revolves around 12 lines of code.

First, you begin by importing the modules you need, and that is:

import nltk # Natural Language Toolkit for text processing from nltk.corpus import stopwords # Stopwords dataset for filtering common words from nltk.stem import WordNetLemmatizer # Lemmatization for word normalization import string # Provides a collection of punctuation characters

Then, you download some resources that you will need, and you only need to run this part once. After downloading it, you can exclude those lines:

# Download required NLTK resources

nltk.download('stopwords') # Download stopwords dataset

nltk.download('punkt') # Download punkt tokenizer

nltk.download('wordnet') # Download WordNet dataset for lemmatization

Next, you insert your entire function to process the text:

# Function for text preprocessing

def preprocess_text(text):

# Create a lemmatizer object for English

lemmatizer = WordNetLemmatizer()

# Load the stopwords dataset for English

stop_words = set(stopwords.words('english'))

# Split the input text into words (tokenization)

words = text.split()

# Apply lemmatization, remove stopwords, strip whitespace, and remove special characters

filtered_words = [lemmatizer.lemmatize(word) for word in words if word.lower() not in stop_words and word.strip() != '']

# Remove single quotes, hyphens, and punctuation marks

filtered_words = [word.replace("'", "").replace("-", "") for word in filtered_words]

filtered_words = [''.join(char for char in word if char not in string.punctuation) for word in filtered_words]

# Reconstruct the processed text from the filtered words

processed_text = ' '.join(filtered_words)

return processed_text

Next, you’ll need to add just three more lines of code to input your text and receive the formatted output:

# Process text text = "Your text here" processed_text = preprocess_text(text) print(processed_text)

Here is the full code on github: https://github.com/ViniciusStanula/gpt-prompt-reducer

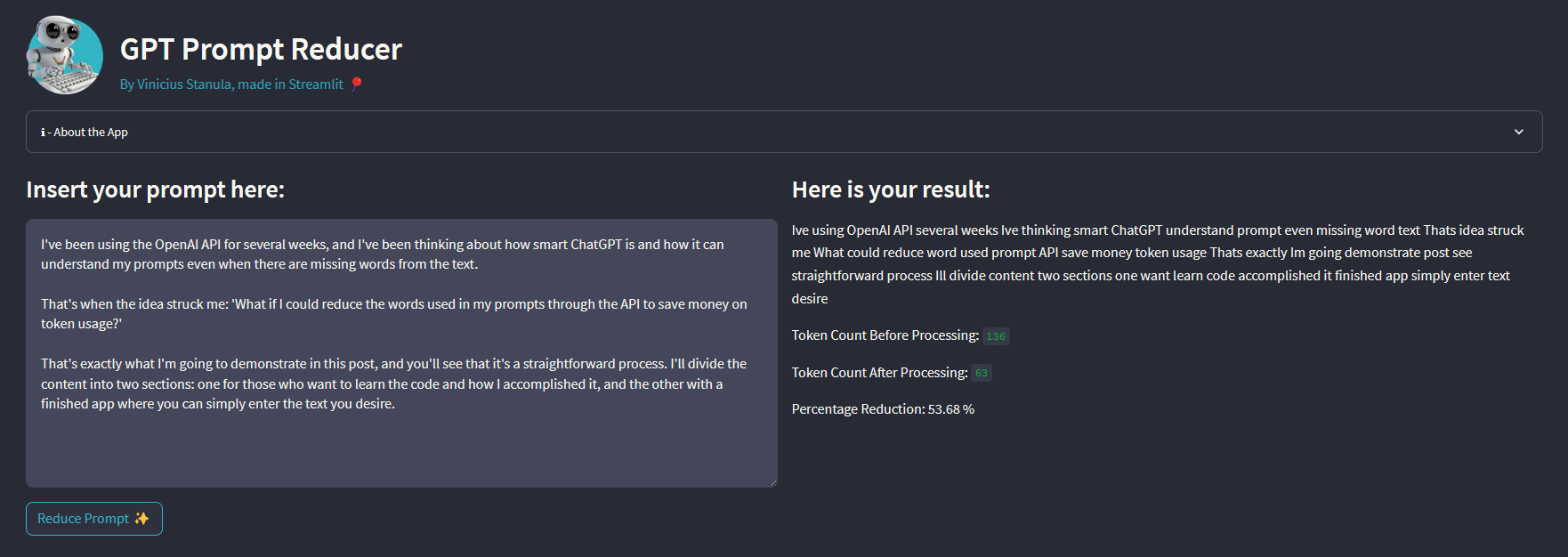

Section 2: Using the Finished App

If you don’t know how to code in Python and are just curious to see how this prompt reduction works, I’ve created a simple web application for you to use:

- Simply go to the web app at https://gpt-prompt-reducer.streamlit.app/,

- Insert your text or prompt,

- Click the button,

- And get your results.

So, that is basically it. I hope you liked the content, and I would be happy to see your applications and to receive any feedback that you have.